The tools of Zebra Aurora Vision software are highly optimized for modern multi-core processors with SSE/AVX technology.

The table below shows the results of a vision software performance benchmark.

| Filter | Zebra Aurora Vision Studio 4.12 | Another product | OpenCV 4.2 |
|---|---|---|---|
| Image negation | 0.030 ms | 0.032 ms | 0.025 ms |
| Add two images (pixel by pixel) | 0.029 ms | 0.047 ms | 0.036 ms |
| Image difference (pixel by pixel) | 0.036 ms | 0.045 ms | 0.030 ms |
| RGB to HSV conversion (3xUINT8) | 0.127 ms | 1.026 ms | 0.129 ms |
| Gauss filter 3x3 | 0.031 ms | 0.035 ms | 0.037 ms |
| Gauss filter 5x5 | 0.033 ms | 0.073 ms | 0.052 ms |
| Gauss filter 21x21 (std-dev: 4.3) | 0.311 ms | 0.355 ms | 0.240 ms |
| Mean filter 21x21 | 0.100 ms | 0.102 ms | 0.291 ms |
| Image erosion 3x3 | 0.030 ms | 0.035 ms | 0.050 ms |
| Image erosion 5x5 | 0.030 ms | 0.036 ms | 0.059 ms |
| Sobel gradient magnitude (sum) | 0.032 ms | 0.035 ms | |
| Sobel gradient magnitude (hypot) | 0.034 ms | 0.040 ms | |
| Threshold to region | 0.043 ms | 0.076 ms | |
| Splitting region into blobs | 0.119 ms | 0.206 ms | |
| Bilinear image resize | 0.131 ms | 0.108 ms | 0.052 ms |

The above results correspond to a 640x480, 1xUINT8 image on an Intel Core i5 3.2 GHz machine. To eliminate the non-random (systematic) component of the measurement error, each operation was timed at 30 different repetition counts, increasing in steps of 10: 10, 20, 30, ..., 300. A straight line was then fitted to the measured total execution times as a function of the repetition count. In this approach, the constant error related to starting and stopping the measurement is reflected in the line's offset (intercept), while the execution time of a single operation is given by its slope. To increase measurement precision, larger images were tested and the results were normalized to the 640x480 resolution. Note also that functions from different libraries do not always produce exactly the same output data.
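The line-fitting procedure described above can be sketched in Python as follows. This is an illustrative harness, not the tool that produced the table: `fit_line`, `time_per_call` and `normalize_to_resolution` are hypothetical names, `op` stands for any callable that runs the measured operation once, and the resolution scaling assumes that execution time grows linearly with the number of pixels.

```python
import time
from typing import Callable, List, Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of ys = slope * xs + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def time_per_call(op: Callable[[], object], step: int = 10,
                  points: int = 30) -> Tuple[float, float]:
    """Run op() step, 2*step, ..., points*step times (10, 20, ..., 300 by default),
    time each batch, and fit a line to total time versus repetition count.

    Returns (seconds per call, constant start/stop overhead in seconds).
    """
    counts = [step * k for k in range(1, points + 1)]
    totals: List[float] = []
    for count in counts:
        start = time.perf_counter()
        for _ in range(count):
            op()
        totals.append(time.perf_counter() - start)
    # Slope = time per call; intercept = fixed cost of starting/stopping the timer.
    return fit_line(counts, totals)


def normalize_to_resolution(seconds: float, width: int, height: int,
                            ref_width: int = 640, ref_height: int = 480) -> float:
    """Scale a time measured on a width x height image to the reference resolution.

    Assumption: execution time grows linearly with the number of pixels.
    """
    return seconds * (ref_width * ref_height) / (width * height)
```

For example, `per_call, overhead = time_per_call(lambda: some_filter(image))` would return the per-call time as the slope and the start/stop overhead as the intercept; `some_filter` and `image` are placeholders. Fitting over many repetition counts, instead of dividing a single total by its count, keeps the constant overhead out of the per-call estimate.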

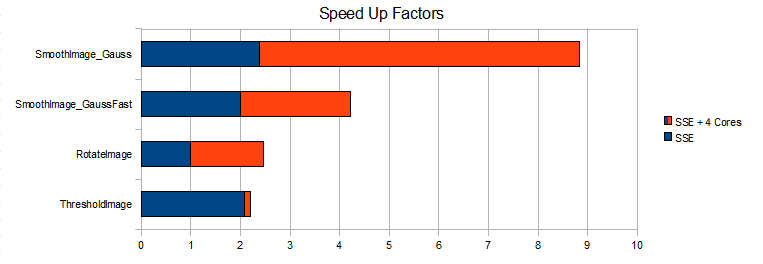

Filters of Zebra Aurora Vision Studio are optimized for SSE/AVX/NEON technology and for multi-core processors. The speed-up achievable with these techniques, however, depends heavily on the particular operator. Simple pixel-by-pixel transforms already reach memory bandwidth limits after SSE-based optimization. More complex filters such as Gaussian smoothing, on the other hand, can run up to 10 times faster than with C++ optimizations alone.
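A back-of-the-envelope check illustrates the memory-bandwidth remark: dividing the bytes a pixel-wise filter has to move by its measured time gives an effective throughput. The sketch below assumes 1 byte per pixel (1xUINT8), one read per input pixel and one write per output pixel, and uses the 640x480 timings from the Zebra Aurora Vision Studio 4.12 column of the first table; the function name is hypothetical. Because a 640x480 UINT8 image occupies only about 300 KB, part of this traffic may be served from CPU caches, so the result is an effective figure rather than a measurement of DRAM bandwidth.

```python
def effective_throughput_gbps(ms_per_call: float, width: int = 640, height: int = 480,
                              bytes_per_pixel: int = 1, inputs: int = 1,
                              outputs: int = 1) -> float:
    """Bytes moved per call divided by time, in GB/s (1 GB = 1e9 bytes).

    Assumes each input image is read once and each output image written once.
    """
    image_bytes = width * height * bytes_per_pixel
    bytes_moved = image_bytes * (inputs + outputs)
    return bytes_moved / (ms_per_call * 1e-3) / 1e9


# Image negation: one input, one output, 0.030 ms.
print(f"negation: {effective_throughput_gbps(0.030):.1f} GB/s")  # 20.5 GB/s
# Adding two images: two inputs, one output, 0.029 ms.
print(f"add: {effective_throughput_gbps(0.029, inputs=2):.1f} GB/s")  # 31.8 GB/s
```

Operations that do almost no arithmetic per byte and still move tens of GB/s leave little room for further instruction-level gains, whereas compute-heavy filters such as large Gaussian kernels do.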

The table below demonstrates how well different processors perform when executing our software tools (the higher the better). You can use it as a reference when choosing hardware for your application.

| Device description | Executor Engine | Image processing | Image analysis | Region processing | Applications | Overall result |
|---|---|---|---|---|---|---|
| | 54.9 | 32.7 | 41.1 | 61.7 | 53.1 | 48.7 |
| | 54.9 | 79.4 | 87.1 | 108.2 | 105.4 | 87.0 |
| | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 |
| | 193.5 | 204.2 | 157.3 | 143.6 | 167.3 | 173.2 |
| | 112.3 | 213.4 | 164.8 | 218.7 | 174.6 | 176.7 |
| | 311.6 | 136.8 | 171.6 | 210.0 | 212.0 | 208.4 |
| | 427.8 | 534.6 | 303.6 | 295.9 | 352.6 | 382.9 |
| | 507.6 | 593.4 | 346.8 | 345.9 | 393.1 | 437.4 |
| | 545.3 | 628.1 | 355.1 | 324.7 | 403.6 | 455.0 |
| | 554.6 | 645.5 | 359.0 | 360.4 | 416.5 | 467.2 |
| | 611.6 | 667.6 | 366.6 | 356.9 | 421.3 | 484.8 |
| | 628.3 | 678.7 | 380.5 | 378.9 | 420.8 | 483.5 |
| | 641.8 | 710.0 | 365.9 | 366.8 | 416.3 | 500.2 |
| | 640.2 | 699.1 | 380.9 | 378.8 | 412.6 | 502.3 |
| | 663.7 | 794.0 | 395.7 | 390.2 | 458.1 | 540.3 |
| | 684.3 | 830.1 | 422.0 | 406.8 | 492.6 | 567.1 |
| | 798.2 | 887.5 | 474.7 | 461.1 | 550.1 | 634.3 |
| | 667.9 | 1407.1 | 535.9 | 439.0 | 419.6 | 693.9 |
| | 862.5 | 1364.7 | 587.8 | 491.3 | 594.3 | 780.1 |

Higher value means better performance.

The test measures execution time for a constant number of operations. Results are normalized so that the reference device scores 100.0.
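In most rows of this table, the overall result equals, to within rounding, the arithmetic mean of the five category scores; for example, (54.9 + 32.7 + 41.1 + 61.7 + 53.1) / 5 = 48.7. A few rows deviate noticeably, so this is an observation from the published numbers rather than a documented formula. The sketch below, built around a hypothetical `overall_result` helper, reproduces the check for three rows.

```python
from statistics import fmean
from typing import Sequence

CATEGORIES = ("Executor Engine", "Image processing", "Image analysis",
              "Region processing", "Applications")


def overall_result(scores: Sequence[float]) -> float:
    """Arithmetic mean of the five category scores (observed, not documented)."""
    if len(scores) != len(CATEGORIES):
        raise ValueError(f"expected {len(CATEGORIES)} scores, got {len(scores)}")
    return fmean(scores)


# Category scores from three rows of the table, with the published overall result.
rows = [
    ([54.9, 32.7, 41.1, 61.7, 53.1], 48.7),
    ([193.5, 204.2, 157.3, 143.6, 167.3], 173.2),
    ([667.9, 1407.1, 535.9, 439.0, 419.6], 693.9),
]
for scores, published in rows:
    print(round(overall_result(scores), 1), published)
```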


The table below demonstrates how well different hardware configurations perform when executing our Deep Learning tools (the higher the better). You can use it as a reference when choosing hardware for your application.

| Hardware configuration | Classify Object (CO) | Detect Anomalies 2 (DA2) | Detect Anomalies 1 Global (DA1G) | Detect Anomalies 1 Local (DA1L) | Detect Features (DF) | Instance Segmentation (IS) | Locate Points (LP) | Overall result |
|---|---|---|---|---|---|---|---|---|
| | 35.7 | 5.7 | 24.0 | 6.3 | 6.9 | 15.0 | 7.0 | 7.4 |
| | 118.2 | 30.1 | 64.3 | 12.4 | 13.6 | 92.7 | 18.4 | 20.2 |
| | 122.8 | 26.9 | 58.2 | 14.8 | 13.3 | 83.9 | 15.0 | 20.5 |
| | 58.9 | 26.6 | 59.1 | 18.8 | 13.6 | 88.3 | 16.3 | 22.6 |
| | 186.0 | 32.6 | 75.6 | 17.6 | 14.9 | 102.9 | 19.1 | 24.4 |
| | 164.3 | 34.9 | 82.6 | 22.2 | 18.9 | 105.3 | 21.6 | 29.1 |
| | 245.5 | 43.9 | 70.5 | 40.3 | 43.2 | 172.7 | 68.6 | 53.1 |
| | 68.5 | 135.1 | 108.5 | 94.8 | 85.4 | 96.5 | 69.2 | 96.5 |
| | 102.2 | 99.1 | 92.8 | 102.8 | 99.5 | 97.8 | 100.0 | 99.6 |
| | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 |
| | 101.6 | 103.9 | 90.0 | 101.0 | 100.5 | 96.0 | 105.8 | 100.5 |
| | 82.7 | 136.0 | 90.9 | 129.6 | 133.6 | 106.3 | 134.6 | 124.1 |
| | 102.7 | 157.7 | 135.4 | 142.8 | 148.8 | 133.1 | 134.7 | 143.3 |
| | 109.0 | 158.9 | 108.4 | 161.5 | 167.7 | 127.3 | 161.4 | 150.5 |
| | 99.4 | 192.5 | 167.7 | 173.8 | 182.4 | 155.5 | 168.6 | 173.7 |
| | 99.7 | 244.3 | 201.7 | 249.8 | 556.0 | 172.8 | 511.7 | 259.9 |
| | 161.6 | 276.8 | 134.3 | 274.2 | 569.7 | 175.8 | 594.9 | 270.8 |

Higher value means better performance.

The test measures execution time for selected Deep Learning tools. Results are normalized so that the reference configuration scores 100.0.
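When using this table to pick hardware, a score can be turned into a rough latency estimate. The sketch below assumes that each score is inversely proportional to execution time (score = 100 * t_reference / t). That fits the statement that execution time was measured and that higher values are better, but it is not stated explicitly. `estimate_time_ms` is a hypothetical helper, and real latency also depends on the model and input size.

```python
def estimate_time_ms(reference_ms: float, reference_score: float,
                     target_score: float) -> float:
    """Rough execution-time estimate on a target configuration.

    Assumption: score = 100 * t_baseline / t, i.e. scores are inversely
    proportional to execution time, so t_target = t_ref * score_ref / score_target.
    """
    if reference_score <= 0 or target_score <= 0:
        raise ValueError("scores must be positive")
    return reference_ms * reference_score / target_score


# Hypothetical: a tool takes 40 ms on hardware that scores 100.0 for it.
print(f"{estimate_time_ms(40.0, 100.0, 244.3):.1f} ms")  # 16.4 ms
```

In this made-up example, a configuration scoring 244.3 for the same tool would be expected to need roughly 40 * 100 / 244.3 ≈ 16.4 ms per inference.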
